How Does Your AI Work? Nearly Two-Thirds Can’t Say, Survey Finds

(Lightspring/Shutterstock)

Nearly two-thirds of C-level AI leaders can’t explain how specific AI decisions or predictions are made, according to a new survey on AI ethics by FICO. The company says the results show there is room for improvement.

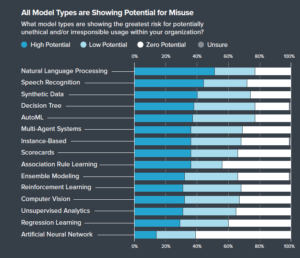

FICO hired Corinium to survey 100 AI leaders for its new study, “The State of Responsible AI: 2021,” which the credit scoring company released today. Although there are some bright spots in how companies are approaching AI ethics, the potential for abuse remains high.

For example, only 22% of respondents say their organizations have an AI ethics board, which suggests that most companies are ill-prepared to deal with questions about bias and fairness. Similarly, 78% of respondents say it’s hard to secure executive support for prioritizing the ethical and responsible use of AI.

More than two-thirds of respondents say the processes they use to ensure AI models comply with regulations are ineffective, while nine out of 10 say inefficient model monitoring is a barrier to AI adoption.

FICO’s survey also points to a general lack of urgency in addressing the problem. Staff members working in risk and compliance, and in IT and data analytics, are largely aware of ethics concerns, but executives generally are not.

Government regulation of AI has generally trailed adoption, especially in the United States, where a hands-off approach has largely been the rule (apart from existing regulations in financial services, healthcare, and other fields).

Given that the regulatory environment is still developing, it is concerning that 43% of respondents in FICO’s study said “they have no responsibilities beyond meeting regulatory compliance to ethically manage AI systems whose decisions may indirectly affect people’s livelihoods,” such as audience segmentation models, facial recognition models, and recommendation systems, the company said.

At a time when AI is making life-altering decisions about companies’ customers and stakeholders, a lack of awareness of the ethical and fairness concerns around AI poses a serious risk to those companies, says Scott Zoldi, FICO’s chief analytics officer.

“Senior leadership and boards must understand and enforce auditable, immutable AI model governance and product model monitoring to ensure that the decisions are accountable, fair, transparent, and responsible,” Zoldi said in a press release.

As AI adoption increases among companies, it will only have a bigger impact on people’s lives, says AI Truth founder and CEO Cortnie Abercrombie, who contributed to the report.

“Key stakeholders, such as senior decision makers, board members, customers, etc. need to have a clear understanding on how AI is being used within their business, the potential risks involved and the systems put in place to help govern and monitor it,” she stated in the press release. “AI developers can play a major role in helping educate key stakeholders by inviting them to the vetting process of AI models.”

As the old saying goes, with great power comes great responsibility, Zoldi points out. Given how much power AI brings, it’s time for companies to bring a matching level of responsibility and accountability to their AI processes.

You can download a copy of FICO’s report here.
